Well, at least here we can talk about Voldemort without invoking him, right? (Unless this shit is like Betelgeuse: the third time you mention his name, he pops up to ruin your day.)

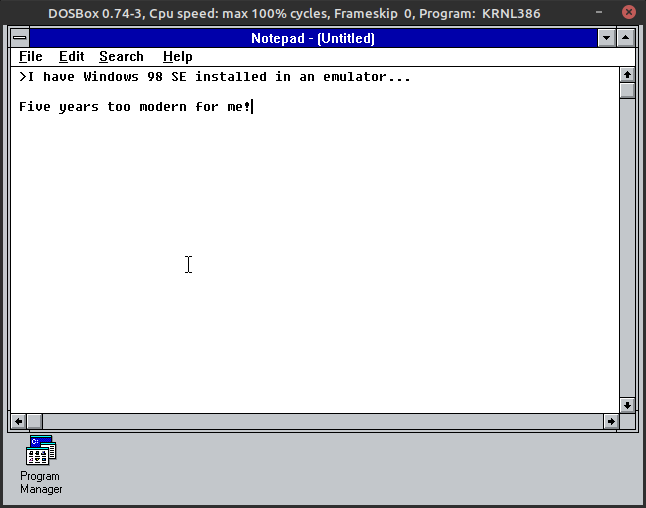

Okay, seriously now: that’s sensible, since in r/brasil it would basically be advertising.

I’d consider growing those, mostly to blend with some extremely hot pepper so that I can control the heat properly.